I have had a couple of discussions around the various aspects of managing quality, and I even have a section dedicated to quality management in the training that I offer.

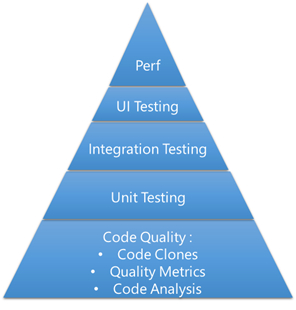

I like to break down quality management as follows:

We all (should) know about the Agile testing quadrant that was initially discussed by Brian Marick and then used to form the basis of Lisa Crispin’s book on Agile Testing.

A lot of people have asked me “where do I start”?

I want to take this and break it down into a practical, technical approach.

The base of the pyramid is inspection: does the code look right?

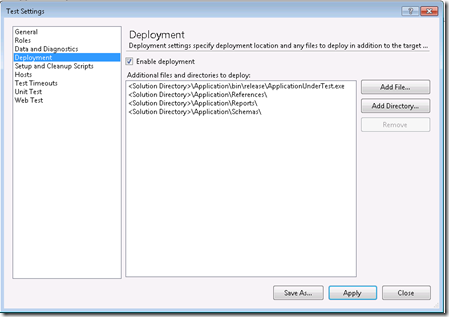

This is the easiest step to accomplish. The tools (such as Code Analysis and Code Metrics) are already built into Visual Studio, and it is a matter of a few clicks to get the results. You can make this part of the review process, or even automate it using TFS Build so that these reports are produced every time someone checks in code.
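To make "does the code look right?" a little more concrete, here is a minimal sketch of an automated inspection check. It is written in Python rather than .NET, so it is not how Visual Studio's Code Metrics works. It only approximates one of the metrics Visual Studio reports, cyclomatic complexity, by counting decision points per function. The function names and the default threshold of 10 are assumptions for illustration.

```python
from __future__ import annotations

import ast

# Node types that each add one decision point. This is a simplified
# approximation; real tools differ in the details.
_BRANCH_NODES = (ast.If, ast.For, ast.AsyncFor, ast.While, ast.IfExp, ast.ExceptHandler)


def complexity(func: ast.AST) -> int:
    """Approximate cyclomatic complexity: 1 + number of decision points.

    Nested functions are walked too, so their branches count towards the parent.
    """
    score = 1
    for node in ast.walk(func):
        if isinstance(node, _BRANCH_NODES):
            score += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` adds two extra paths.
            score += len(node.values) - 1
    return score


def inspect_source(source: str, threshold: int = 10) -> list[tuple[str, int]]:
    """Return (function name, score) for every function above the (hypothetical) threshold."""
    tree = ast.parse(source)
    findings = []
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            score = complexity(node)
            if score > threshold:
                findings.append((node.name, score))
    return findings


SAMPLE = '''
def simple(x):
    return x + 1

def branchy(x):
    if x > 0 and x < 10:
        return "small"
    elif x >= 10:
        return "big"
    for i in range(3):
        if i == x:
            return "match"
    return "other"
'''

print(inspect_source(SAMPLE, threshold=3))  # [('branchy', 6)]
```

A build step could run a check like this over the changed files and fail the build when `inspect_source` returns any findings. This follows the same idea as having TFS Build produce the reports on every check-in.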

The next step is to verify, or test, that the functionality of the application/code works as expected. There are a couple of methodologies (TDD, ATDD, etc.) that you could adopt to get unit testing in place. A personal favourite of mine is applying the SOLID principles when designing the application, so that it is test-friendly. Overall, my advice is: just start.
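The example below sketches what "test-friendly" can mean in practice. It applies the Dependency Inversion Principle (the "D" in SOLID): `OrderService` depends on an abstract `PaymentGateway` rather than on a concrete payment provider, so a unit test can hand it a fake. All class names are hypothetical. The code uses Python's `unittest`, not MSTest, but the design idea carries over.

```python
from __future__ import annotations

import unittest
from typing import Protocol


class PaymentGateway(Protocol):
    """Abstraction the service depends on (the 'D' in SOLID)."""

    def charge(self, amount_cents: int) -> bool: ...


class OrderService:
    def __init__(self, gateway: PaymentGateway) -> None:
        # The dependency is injected, so tests can supply a fake.
        self._gateway = gateway

    def place_order(self, amount_cents: int) -> str:
        if amount_cents <= 0:
            raise ValueError("amount must be positive")
        return "confirmed" if self._gateway.charge(amount_cents) else "declined"


class FakeGateway:
    """Test double: records charges and returns a canned result."""

    def __init__(self, succeed: bool) -> None:
        self.succeed = succeed
        self.charged: list[int] = []

    def charge(self, amount_cents: int) -> bool:
        self.charged.append(amount_cents)
        return self.succeed


class OrderServiceTests(unittest.TestCase):
    def test_confirms_when_payment_succeeds(self) -> None:
        gateway = FakeGateway(succeed=True)
        self.assertEqual(OrderService(gateway).place_order(500), "confirmed")
        self.assertEqual(gateway.charged, [500])

    def test_declines_when_payment_fails(self) -> None:
        self.assertEqual(OrderService(FakeGateway(succeed=False)).place_order(500), "declined")

    def test_rejects_non_positive_amount_without_charging(self) -> None:
        gateway = FakeGateway(succeed=True)
        with self.assertRaises(ValueError):
            OrderService(gateway).place_order(0)
        self.assertEqual(gateway.charged, [])
```

Saved as, say, `test_orders.py`, this runs with `python -m unittest test_orders`. None of the tests needs a real payment provider, which is what keeps them fast and isolated.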

Next is a subtle yet important step: integration testing, even if it only means separating the concept of a unit test from that of an integration test. A unit test focuses on the smallest piece of code in isolation. An integration test combines these units and checks how they perform together.
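The following hypothetical sketch illustrates the difference. A pure `apply_discount` function gets unit tests on its own. A separate integration test combines it with a repository backed by a real (in-memory) SQLite database. The names and schema are assumptions made for the example.

```python
import sqlite3
import unittest


def apply_discount(total_cents: int, percent: int) -> int:
    """Pure business rule: the smallest 'unit', testable in isolation."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return total_cents * (100 - percent) // 100


class SqliteOrderRepository:
    """Persists order totals in a real SQLite database."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self._conn = conn
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS orders ("
            "id INTEGER PRIMARY KEY, total_cents INTEGER NOT NULL)"
        )

    def add(self, total_cents: int) -> int:
        cur = self._conn.execute(
            "INSERT INTO orders (total_cents) VALUES (?)", (total_cents,)
        )
        self._conn.commit()
        return cur.lastrowid

    def total_revenue(self) -> int:
        (value,) = self._conn.execute(
            "SELECT COALESCE(SUM(total_cents), 0) FROM orders"
        ).fetchone()
        return value


class ApplyDiscountUnitTests(unittest.TestCase):
    # Unit tests: one function, no database or other collaborators.
    def test_ten_percent_off(self) -> None:
        self.assertEqual(apply_discount(1000, 10), 900)

    def test_rejects_invalid_percent(self) -> None:
        with self.assertRaises(ValueError):
            apply_discount(1000, 150)


class CheckoutIntegrationTests(unittest.TestCase):
    # Integration test: the discount rule and the real SQLite-backed
    # repository working together.
    def setUp(self) -> None:
        self.conn = sqlite3.connect(":memory:")
        self.repo = SqliteOrderRepository(self.conn)

    def tearDown(self) -> None:
        self.conn.close()

    def test_discounted_orders_are_stored_and_summed(self) -> None:
        self.repo.add(apply_discount(1000, 10))  # 900
        self.repo.add(apply_discount(2000, 50))  # 1000
        self.assertEqual(self.repo.total_revenue(), 1900)
```

Keeping the two kinds of test in separate classes (or separate projects) makes it easy to run the fast unit tests on every check-in and the slower integration tests less often, if that suits your build.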

UI testing can be quite difficult in many organisations. UI tests tend to be fairly brittle and need ongoing maintenance, which puts a lot of people off.

I like the approach of having manual test cases executed during the sprint or iteration (using something like Microsoft Test Manager). Then, in the next sprint or once the functionality has stabilised a bit, those manual tests are converted into Coded UI tests for eventual automated execution.

Finally, there is load and performance testing. This usually only happens when there are problems, or when a big client asks for the stats, right?!

Whether to include this type of testing depends very much on the application and on when and where it is used. A simple desktop application with five users and limited data has a much smaller performance requirement than, for example, a large, customer-facing e-commerce site. So weigh this up and add load and performance testing where the application's usage justifies it.
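The core idea of a load test is simple: simulate many concurrent users, time each request, and look at throughput and latency percentiles rather than averages. The sketch below does this in-process with a thread pool. `handle_request` is a hypothetical stand-in for the code under test. A real load test (for example, Visual Studio's web performance and load tests) would instead drive a deployed system over the network.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def handle_request() -> int:
    """Hypothetical stand-in for the code under test."""
    time.sleep(0.001)  # simulate ~1 ms of I/O (database, network, ...)
    return sum(range(1000))


def percentile(sorted_values: List[float], pct: float) -> float:
    """Nearest-rank percentile of an already sorted, non-empty list."""
    if not sorted_values:
        raise ValueError("no samples")
    rank = max(1, math.ceil(pct * len(sorted_values) / 100))
    return sorted_values[rank - 1]


def run_load_test(
    target: Callable[[], object], users: int, requests_per_user: int
) -> Dict[str, float]:
    """Simulate `users` concurrent users, each calling `target` repeatedly."""

    def one_user(_user_id: int) -> List[float]:
        latencies = []
        for _ in range(requests_per_user):
            start = time.perf_counter()
            target()
            latencies.append(time.perf_counter() - start)
        return latencies

    started = time.perf_counter()
    # One thread per simulated user: fine for I/O-bound work, but CPU-bound
    # code in CPython is serialised by the GIL.
    with ThreadPoolExecutor(max_workers=users) as pool:
        per_user = list(pool.map(one_user, range(users)))
    elapsed = time.perf_counter() - started

    latencies = sorted(t for user in per_user for t in user)
    return {
        "requests": len(latencies),
        "throughput_rps": len(latencies) / elapsed,
        "p50_ms": percentile(latencies, 50) * 1000,
        "p95_ms": percentile(latencies, 95) * 1000,
        "max_ms": latencies[-1] * 1000,
    }


stats = run_load_test(handle_request, users=5, requests_per_user=20)
print(stats)
```

Reporting p95 rather than only the mean is a deliberate choice. A handful of slow requests is exactly what users notice, and exactly what an average hides.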

So, from basic inspection to performance and load testing, quality management is a journey that you need to travel. Using tools that incorporate this functionality (such as Visual Studio) is a big help. It then becomes a matter of getting to grips with what is already there for you to use. Combining this with TFS to run the tests automatically whenever a change is checked in, or on a scheduled build, will greatly improve quality, flexibility, and agility.