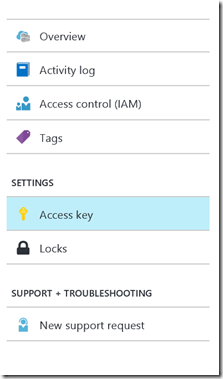

I’m actively involved in creating extensions for VSTS, and one question that comes up a lot is telemetry: are people using the extensions, how are they using them, and what about errors and exceptions? It has become such a topic of discussion that Will Smythe has given some guidance on how to add Application Insights (AppInsights) telemetry to your extension.

He gives a good overview and an example of using AppInsights in a simple JavaScript application (in fact, a single HTML page). Personally, I prefer using TypeScript for my JS development.

The method that Will explains and TypeScript do not mix as seamlessly as I would like. Luckily, there is hope.

Microsoft also provides a TypeScript type definition for their AppInsights API. Currently it is in preview, but I have not had any problems with it.

You can install it via NuGet (from the Package Manager Console) like this:

Install-Package Microsoft.ApplicationInsights.TypeScript -Pre

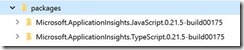

Once it is installed, it will place the libraries in the packages folder in the root of the project. There should be two libraries: the JavaScript library and the TypeScript types.

Under the JavaScript folder (Microsoft.ApplicationInsights.JavaScript.0.21.5-build00175 in this case) you will find the scripts in the content\scripts folder. It has two versions, the full and minified version of the library. In our instance simply copy the minified version (ai.0.21.5-build00175.min.js in this instance) to the scripts folder of your extension.

The package should already have added the TypeScript definition file to your scripts folder. In case it has not, under the TypeScript folder (Microsoft.ApplicationInsights.TypeScript.0.21.5-build00175 in this case) you will also find a content\scripts folder that contains the type definition (ai.0.21.5-build00175.d.ts in this instance). Copy that to your TypeScript definitions folder.

Now you should be ready to use them. Include the JavaScript library in your extension and reference it in your HTML page, then add a reference to the TypeScript type definition in your TypeScript files.

/// <reference path="ai.0.21.5-build00175.d.ts" />

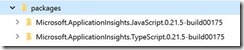

To use the library, you need to configure it using a configuration snippet. The configuration snippet contains the instrumentation key that was set up by following Will’s post:

var snippet: any = {

config: {

instrumentationKey: "<<your instrumentation key>>"

}

};

You can pass this into the initialisation object:

var init = new Microsoft.ApplicationInsights.Initialization(snippet);

And then from the initialisation object you create an AppInsights instance:

var applicationInsights = init.loadAppInsights();
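If the minified script fails to load (for example, because the path in the HTML page is wrong), the `Microsoft` global will not exist and the two lines above will throw. A minimal sketch of a guard follows. The helper name `tryLoadAppInsights` and its `root` parameter are my own inventions for illustration, not part of the library, and the helper deliberately uses `any` so that it does not depend on the type definition:

```typescript
// Hypothetical helper (not part of the AppInsights library).
// Returns an AppInsights instance, or null when the script was not loaded.
// `root` defaults to the global object, which is where the script exposes `Microsoft`.
function tryLoadAppInsights(instrumentationKey: string, root: any = globalThis): any {
    const ms = root.Microsoft;
    if (!ms || !ms.ApplicationInsights) {
        return null; // library missing: the caller can simply skip telemetry
    }

    // Same steps as shown above: build the snippet, initialise, load.
    const snippet: any = {
        config: {
            instrumentationKey: instrumentationKey
        }
    };
    const init = new ms.ApplicationInsights.Initialization(snippet);
    return init.loadAppInsights();
}
```

The caller then checks for `null` once and carries on without telemetry if the library is not there.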

On the AppInsights instance you can go ahead and start to capture your telemetry using the following:

trackPageView(name?: string, url?: string, properties?: Object, measurements?: Object, duration?: number): void;

trackEvent(name: string, properties?: Object, measurements?: Object): void;

trackAjax(absoluteUrl: string, pathName: string, totalTime: number, success: boolean, resultCode: number): void;

trackException(exception: Error, handledAt?: string, properties?: Object, measurements?: Object): void;

trackMetric(name: string, average: number, sampleCount?: number, min?: number, max?: number, properties?: Object): void;

trackTrace(message: string, properties?: Object): void;
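To show how a couple of these calls might fit together, here is a rough sketch that wraps an action so that success is reported through `trackEvent` and failure through `trackException`. The `TelemetrySink` interface only copies two of the signatures above. `runTracked`, `RecordingSink` and the `outcome` property are hypothetical names I made up so that the example runs without the real library:

```typescript
// Subset of the AppInsights surface, copied from the signatures listed above.
interface TelemetrySink {
    trackEvent(name: string, properties?: Object, measurements?: Object): void;
    trackException(exception: Error, handledAt?: string, properties?: Object, measurements?: Object): void;
}

// Hypothetical helper: runs an action and reports how it went.
function runTracked<T>(sink: TelemetrySink, name: string, action: () => T): T {
    try {
        const result = action();
        sink.trackEvent(name, { outcome: "success" });
        return result;
    } catch (err) {
        // Anything that is not already an Error is wrapped so trackException gets an Error.
        const error = err instanceof Error ? err : new Error(String(err));
        sink.trackException(error, name, { outcome: "failure" });
        throw error; // rethrow so the caller still sees the failure
    }
}

// In-memory stand-in for the real AppInsights instance, for trying the helper out.
class RecordingSink implements TelemetrySink {
    events: string[] = [];
    exceptions: string[] = [];
    trackEvent(name: string): void { this.events.push(name); }
    trackException(exception: Error): void { this.exceptions.push(exception.message); }
}
```

In an extension you would pass the real `applicationInsights` instance instead of `RecordingSink`, assuming it satisfies the two signatures in the interface.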

The full code would look something like this:

var snippet: any = {

config: {

instrumentationKey: "<<your instrumentation key>>"

}

};

var init = new Microsoft.ApplicationInsights.Initialization(snippet);

var applicationInsights = init.loadAppInsights();

applicationInsights.trackPageView("Index");

applicationInsights.trackEvent("PageLoad");

// timeMeasurement is a number you have measured yourself, such as the page load time

applicationInsights.trackMetric("LoadTime", timeMeasurement);
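The `timeMeasurement` value is not produced by the library. You have to measure it yourself. One possible way to do that is sketched below, using `Date.now()` and reporting elapsed milliseconds. The `MetricSink` interface copies the `trackMetric` signature from earlier in this post, while `startTimer` and its `now` parameter are hypothetical names used only for this example:

```typescript
// Subset of the AppInsights surface used here, copied from the signature list above.
interface MetricSink {
    trackMetric(name: string, average: number, sampleCount?: number, min?: number, max?: number, properties?: Object): void;
}

// Hypothetical helper: starts timing now and returns a function that,
// when called, reports the elapsed milliseconds as a metric.
// `now` can be swapped for a fake clock; it defaults to the system clock.
function startTimer(
    sink: MetricSink,
    metricName: string,
    now: () => number = () => Date.now()
): () => number {
    const startedAt = now();
    return () => {
        const elapsed = now() - startedAt;
        sink.trackMetric(metricName, elapsed);
        return elapsed;
    };
}

// Possible usage in an extension (assumes applicationInsights was created as shown above):
//   const stopLoadTimer = startTimer(applicationInsights, "LoadTime");
//   ... load the page content ...
//   stopLoadTimer();
```

Passing the clock in as a parameter is just a convenience for trying the helper out; in the extension itself you would leave it at its default.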

![TFS Basic_thumb[1] TFS Basic_thumb[1]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEhwOm7Gb6CP-s-l0mYUJ41i8hrRlk9LnAIEJcrZQ0wWIrxOEZTVeSzXFrCDqULZ5JhapwkWC3U4PFTsTJp6ui_PMQdw5br6h3-LPMDFsvgLwVfU1xNSNRBw4qZK6qY7g5K4T3nQoLLv2FQ/?imgmax=800)