When you start working with Docker, you will notice a lot of terminology being thrown at you. It is a good idea to at least skim the documentation and get a basic understanding of these terms.

That said, we are simply going to walk through a few steps that should give you a basic understanding. Here goes…

If you have your application ready to containerize, the next thing you need is a Dockerfile. The Dockerfile is basically a setup file for your container: a list of instructions Docker follows to build the image your container runs from.

Note: If you have the Docker extension installed in VS Code, you can open the folder containing your sample project, press Ctrl+Shift+P, and then start typing "docker". Select the "Add docker files to workspace" option and provide values for the prompts. This will generate a template for you.

Let's create a Dockerfile by simply creating a new file and naming it "Dockerfile", the default name `docker build` looks for.

For the contents we will start with something simple like this:

```dockerfile
FROM microsoft/dotnet
WORKDIR /app
ENV ASPNETCORE_URLS http://*:5000
EXPOSE 5000
COPY ./output /app
ENTRYPOINT ["dotnet","docker_sample.dll"]
```

Let's break this down:

`FROM microsoft/dotnet`: This says that there is an image named "microsoft/dotnet" in the Docker repository (Docker Hub), and I want that one as my base image. We can be more specific and add a tag (for example, "microsoft/dotnet:1.0-runtime"), and Docker will get that one; without a tag, as in our case, it gets the image tagged "latest". In fact, it is the same as saying "microsoft/dotnet:latest". Note that "latest" is just a tag name chosen by the publisher, not a guarantee of the newest build.
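To make the tag rule concrete, here is a minimal Python sketch of how a reference with no tag ends up meaning `:latest`. The helper `with_default_tag` is hypothetical and simplified: Docker's real reference parsing also fills in the default registry (`docker.io`) and handles digests (`@sha256:...`), which this sketch ignores.

```python
# Hypothetical helper for illustration only; not part of Docker.
def with_default_tag(image_ref: str, default_tag: str = "latest") -> str:
    """Append a default tag to an image reference that has none (simplified)."""
    # A tag can only appear after the last '/'. A ':' earlier in the string
    # belongs to a registry host:port, as in "localhost:5000/docker_sample".
    last_segment = image_ref.rsplit("/", 1)[-1]
    if ":" in last_segment:
        return image_ref  # already tagged, e.g. "microsoft/dotnet:1.0-runtime"
    return f"{image_ref}:{default_tag}"


print(with_default_tag("microsoft/dotnet"))              # microsoft/dotnet:latest
print(with_default_tag("microsoft/dotnet:1.0-runtime"))  # microsoft/dotnet:1.0-runtime
print(with_default_tag("localhost:5000/docker_sample"))  # localhost:5000/docker_sample:latest
```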

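`WORKDIR /app`: This is the working folder inside the container. It is also the folder the `ENTRYPOINT` command runs in, which is why `docker_sample.dll` can be referenced without a full path.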

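`ENV ASPNETCORE_URLS http://*:5000`: Here we explicitly set an environment variable in the container for the web app to use. ASP.NET Core reads `ASPNETCORE_URLS` to decide which addresses to listen on; the `*` means "any address", so the app accepts connections arriving from outside the container on port 5000, not just from inside it.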

`EXPOSE 5000`: You can think of a container as a "closed system"; the only way to reach things inside it is to punch holes through a "firewall". Here we are saying: I have an application in the container that is accessible through port 5000, so port 5000 is the one I want to open.

For default websites you may use port 80 and so on. Strictly speaking, `EXPOSE` only documents which port the application listens on; it does not make the port reachable by itself. Once you have "exposed" the port, you still need to map to it from your host.
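The port therefore has to line up in more than one place: the `ASPNETCORE_URLS` value the app listens on, the `EXPOSE` line, and, when you run the container, the container side of the port mapping. As a hedged illustration, here is a minimal Python sketch that checks the first two against each other for the Dockerfile above. `ports_agree` is a hypothetical helper, and its toy parser only understands the space-separated `ENV KEY value` form used in this post.

```python
from urllib.parse import urlsplit

DOCKERFILE = """\
FROM microsoft/dotnet
WORKDIR /app
ENV ASPNETCORE_URLS http://*:5000
EXPOSE 5000
COPY ./output /app
ENTRYPOINT ["dotnet","docker_sample.dll"]
"""


# Hypothetical helper for illustration only; Docker does not perform this check.
def ports_agree(dockerfile: str) -> bool:
    """Check that the ASPNETCORE_URLS port is one of the EXPOSE'd ports."""
    url_port = None
    exposed: set[int] = set()
    for line in dockerfile.splitlines():
        parts = line.split()
        if len(parts) >= 3 and parts[0] == "ENV" and parts[1] == "ASPNETCORE_URLS":
            # Only the first URL of a ';'-separated list is considered here.
            url_port = urlsplit(parts[2].split(";")[0]).port
        elif parts and parts[0] == "EXPOSE":
            # EXPOSE may list several ports, optionally with a protocol ("5000/tcp").
            exposed.update(int(p.split("/")[0]) for p in parts[1:])
    return url_port is not None and url_port in exposed


print(ports_agree(DOCKERFILE))  # True
```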

`COPY ./output /app`: This is where we "populate" the image. It states that, from the current directory I am in on my machine (the build context), I want to copy everything in the "output" folder into the "/app" folder inside the container.
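One detail worth knowing: when the source of `COPY` is a directory, Docker copies the directory's contents, not the directory itself, which is why `docker_sample.dll` ends up directly in `/app` where the `ENTRYPOINT` expects it. The Python sketch below mimics that behaviour on your local disk with `shutil.copytree`; it is an analogy rather than what Docker runs internally, `copy_like_dockerfile` is a hypothetical helper, and the file names are placeholders.

```python
import shutil
import tempfile
from pathlib import Path


# Hypothetical helper for illustration only; a local analogy of COPY, not Docker itself.
def copy_like_dockerfile(src_dir: Path, dest_dir: Path) -> list[str]:
    """Mimic `COPY ./output /app` locally: copy the contents of src_dir into dest_dir."""
    shutil.copytree(src_dir, dest_dir, dirs_exist_ok=True)
    # List what ended up in dest_dir, relative to it: note there is no "output/" prefix.
    return sorted(p.relative_to(dest_dir).as_posix() for p in dest_dir.rglob("*"))


with tempfile.TemporaryDirectory() as tmp:
    output = Path(tmp, "output")
    output.mkdir()
    # Placeholder files standing in for the published application.
    (output / "docker_sample.dll").write_bytes(b"")
    (output / "docker_sample.deps.json").write_text("{}")
    print(copy_like_dockerfile(output, Path(tmp, "app")))
    # ['docker_sample.deps.json', 'docker_sample.dll']
```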

`ENTRYPOINT ["dotnet","docker_sample.dll"]`: Finally, this is the entry point: when the container is started, it simply executes `dotnet docker_sample.dll`. Written as a JSON array (the "exec" form, which requires double quotes), the command is run directly rather than through a shell.
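A minimal Python sketch of what the exec form means: the bracketed part is parsed as JSON into a list of arguments, and the first element is the program that gets started. `entrypoint_argv` is a hypothetical helper; Docker itself falls back to treating a line that is not valid JSON as shell form, whereas this sketch simply rejects it.

```python
import json
import shlex


# Hypothetical helper for illustration only; not Docker's Dockerfile parser.
def entrypoint_argv(instruction: str) -> list[str]:
    """Turn an exec-form ENTRYPOINT line into the argument list it describes (simplified)."""
    keyword, _, rest = instruction.strip().partition(" ")
    if keyword.upper() != "ENTRYPOINT":
        raise ValueError("not an ENTRYPOINT instruction")
    argv = json.loads(rest)  # exec form is a JSON array, hence the double quotes
    if not isinstance(argv, list) or not all(isinstance(a, str) for a in argv):
        raise ValueError("exec form must be a JSON array of strings")
    return argv


argv = entrypoint_argv('ENTRYPOINT ["dotnet","docker_sample.dll"]')
print(argv)              # ['dotnet', 'docker_sample.dll']
print(shlex.join(argv))  # dotnet docker_sample.dll
```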

If you have followed the previous post <<link>>, you should now be able to open a PowerShell window and, in the folder containing your application and Dockerfile, type:

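```powershell
docker build -t dotnet_sample --rm .
```

Here `-t dotnet_sample` gives the image a name (tag), `--rm` removes the intermediate containers after a successful build (this is already the default), and the trailing `.` is the build context, the folder whose files `COPY` can see.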

If this is the first time you are running this, you will notice it downloading the base image, layer by layer. Once that is done, you should see something like this:

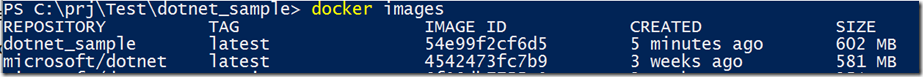

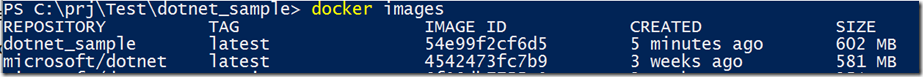

If you now type `docker images`, you will see a list of the images on your machine: the base image that was downloaded, plus a new one named "dotnet_sample".

Next comes the fun part: let's run it…

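```powershell
docker run -p 80:5000 dotnet_sample
```

Options such as `-p` must come before the image name; anything after the image name is passed to the container's entry point as arguments.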
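Argument order matters here. `docker run` reads options up to the image name and passes everything after it to the container's entry point, so `docker run dotnet_sample -p 80:5000` would hand `-p 80:5000` to the application instead of publishing the port. The toy Python model below illustrates that rule; `split_run_args` and its short list of value-taking flags are hypothetical, not Docker's actual parser.

```python
# Hypothetical toy model: a small subset of docker run flags that take a separate value.
VALUE_FLAGS = {"-p", "--publish", "-e", "--env", "--name"}


def split_run_args(args: list[str]) -> tuple[list[str], str, list[str]]:
    """Split `docker run` arguments into (options, image, container_args), simplified."""
    options: list[str] = []
    i = 0
    # Options are only recognised until the first argument that is not an option.
    while i < len(args) and args[i].startswith("-"):
        options.append(args[i])
        if args[i] in VALUE_FLAGS:
            if i + 1 >= len(args):
                raise ValueError(f"{args[i]} needs a value")
            options.append(args[i + 1])
            i += 1
        i += 1
    if i >= len(args):
        raise ValueError("no image name given")
    # The first non-option argument is the image; the rest goes to the container.
    return options, args[i], args[i + 1:]


print(split_run_args(["-p", "80:5000", "dotnet_sample"]))
# (['-p', '80:5000'], 'dotnet_sample', [])
print(split_run_args(["dotnet_sample", "-p", "80:5000"]))
# ([], 'dotnet_sample', ['-p', '80:5000'])
```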

If all goes well, you should see the application's startup messages in the console.

Now we have a running container, but how do we access it? Notice the text that says "Now listening on: http://*:5000"? Navigate to http://localhost:5000 … oops, not accessible? Remember that this is not running "on your machine"; it is running in a container.

The `-p 80:5000` parameter that we passed basically says: let's cross the boundary and map the Docker host's (your machine's) port 80 to port 5000 in the container. Now navigate to http://localhost/. See something familiar?
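The order inside the `-p` value is host first, container second. Here is a minimal Python sketch of reading `80:5000` that way; `parse_publish` and `PortMapping` are hypothetical names, and the sketch covers only the plain `HOST:CONTAINER[/PROTOCOL]` shape, not the other forms Docker accepts, such as one with a host IP address in front.

```python
from typing import NamedTuple


# Hypothetical types and helper for illustration only; not part of Docker.
class PortMapping(NamedTuple):
    host_port: int       # port on your machine (the Docker host)
    container_port: int  # port the app listens on inside the container
    protocol: str = "tcp"


def parse_publish(spec: str) -> PortMapping:
    """Parse a HOST:CONTAINER[/PROTOCOL] value such as the "80:5000" given to -p (simplified)."""
    ports, _, protocol = spec.partition("/")
    parts = ports.split(":")
    if len(parts) != 2:
        raise ValueError(f"expected HOST:CONTAINER, got {spec!r}")
    return PortMapping(int(parts[0]), int(parts[1]), protocol or "tcp")


m = parse_publish("80:5000")
print(f"http://localhost:{m.host_port}/ -> port {m.container_port} in the container")
# http://localhost:80/ -> port 5000 in the container
```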

Open another PowerShell window and type:

```powershell
docker ps
```

You will see the containers running on your machine (hopefully you have at least one).

So what have we done?

- We created an application in the previous post,

- added a Dockerfile,

- built the Docker image from the Dockerfile, and finally

- ran the image as a container.

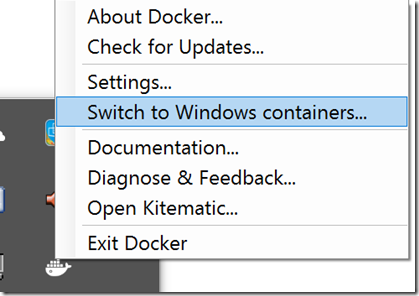

It may be worth mentioning at this juncture that this is actually a Linux container: we are running a .NET Core web application on a Linux image from your Windows host (Docker for Windows runs Linux containers inside a lightweight Linux virtual machine). Is this a crazy world or what?