In the previous post I discussed how one could go about packaging software for the long journey from development into production.

In this post I will take a brief look at a couple of tools that I have come across for taking those packages and automating their deployment. Using them lowers the friction and reduces the reliance on human (and possibly problematic) intervention.

Continuous Integration

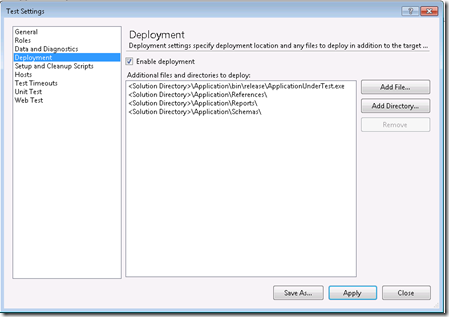

Once again, we all know that continuous integration is a “basic right” when it comes to development environments, but it does not need to be limited to them. If you are using one of the numerous CI environments, extending it to deploy the packages discussed in the previous post should be fairly simple.

I have done this a couple of times, to varying degrees of complexity, in TFS. It is possible to alter the Build Template to do pretty much anything you require. You can set up default deployment mechanisms and then, by simply changing a few parameters, point the build at different environments.

I have done everything from database deployments and remote MSI installations to SharePoint deployments, using just the TFS Build to do all the work.
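The idea of a default deployment setup that is pointed at different environments by changing a few parameters can be sketched roughly as follows. This is only an illustration in Python, not a TFS Build Template: `DeploySettings`, `settings_for`, the server names and the database names are all made up for the example.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class DeploySettings:
    """Hypothetical deployment parameters; not part of any TFS API."""
    target_server: str
    database_name: str
    install_path: str = r"C:\Apps\MyApp"
    run_smoke_tests: bool = True


# Defaults shared by every environment (all values are invented).
DEFAULTS = DeploySettings(target_server="dev-app01", database_name="MyApp_Dev")

# Only the parameters that differ per environment are listed here.
OVERRIDES = {
    "dev": {},
    "test": {"target_server": "test-app01", "database_name": "MyApp_Test"},
    "prod": {
        "target_server": "prod-app01",
        "database_name": "MyApp",
        "run_smoke_tests": False,
    },
}


def settings_for(environment: str) -> DeploySettings:
    """Return the defaults with the chosen environment's overrides applied."""
    if environment not in OVERRIDES:
        raise ValueError(f"Unknown environment: {environment}")
    return replace(DEFAULTS, **OVERRIDES[environment])
```

Calling `settings_for("test")` gives the same install path as the defaults but a different server and database, which is the "change a few parameters" step in miniature.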

3rd Party Deployment Agents

InRelease

You must have heard by now that a very exciting acquisition by Microsoft was the InRelease application from InCycle. This basically extends TFS Build and adds a deployment workflow. It takes the build output (which could be anything that was discussed in the previous post) and kicks off a workflow that includes everything from environment configuration to authorisation of deployment steps.

In SAP they speak of “Transports” between environments, and this, in my mind, speaks to the same idea of transporting the package into different environments.

I’m really excited about this, and I can already see a couple of my clients making extensive use of it.
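To make the idea of a deployment workflow with authorisation steps a bit more concrete, here is a minimal sketch in Python. It is not InRelease's API or data model; the `Release` class, the stage names and the approval rule are assumptions made purely for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical environment order that a package is "transported" through.
STAGES = ["dev", "test", "prod"]


@dataclass
class Release:
    """A package moving through the stages, one approved step at a time."""
    package: str
    approvals: set = field(default_factory=set)      # stages signed off so far
    deployed_to: list = field(default_factory=list)  # stages already deployed

    def approve(self, stage: str) -> None:
        """Record that someone has authorised deployment to a stage."""
        if stage not in STAGES:
            raise ValueError(f"Unknown stage: {stage}")
        self.approvals.add(stage)

    def promote(self) -> str:
        """Deploy to the next stage, but only if it has been approved."""
        if len(self.deployed_to) == len(STAGES):
            raise RuntimeError("Release has already reached the final stage")
        next_stage = STAGES[len(self.deployed_to)]
        if next_stage not in self.approvals:
            raise PermissionError(f"Deployment to {next_stage} is not approved")
        # A real tool would hand the package to a deployment agent here.
        self.deployed_to.append(next_stage)
        return next_stage
```

The point of the sketch is that the same package moves unchanged from stage to stage, and the only thing that varies is who has signed off on the next step.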

Octopus Deploy

Another deployment-focussed package that I have been following is Octopus Deploy (OD). OD works on the same premise as InRelease, having agents/deployers/tentacles in the deployment environment that “do the actual work”.

A key differentiator is that OD sources updates etc. from NuGet feeds, so you need to package your deliverables and then post them to a NuGet server. As I explained in the previous post, NuGet is a very capable platform, and with a number of free NuGet servers around, you can very easily create your “private” environment for package deployment.
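Posting a package to a NuGet server is typically done with the `nuget push` command. The sketch below only builds the argument list for that command in Python, so it can be inspected before anything is run; the helper name `nuget_push_command`, the feed URL and the API key are placeholders, not values from a real setup.

```python
def nuget_push_command(package_path: str, feed_url: str, api_key: str) -> list:
    """Build the argument list for `nuget push` (the command is not executed)."""
    if not package_path.lower().endswith(".nupkg"):
        raise ValueError(f"Not a NuGet package: {package_path}")
    # -Source selects the feed to publish to, -ApiKey authenticates the push.
    return [
        "nuget", "push", package_path,
        "-Source", feed_url,
        "-ApiKey", api_key,
    ]


# Example with placeholder values; pass the list to subprocess.run(...)
# on a machine where the nuget CLI is installed to actually publish.
command = nuget_push_command(
    "MyApp.1.0.0.nupkg",
    "http://nuget.example.local/",
    "my-api-key",
)
```

Running this as the last step of a CI build would mean every successful build ends up on the feed, ready for a deployment tool to pick up.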

System Center

Do not forget System Center, or more specifically System Center Configuration Manager (SCCM). SCCM is a great way to push or deploy applications (generally MSIs) to different servers or environments. It is very capable in its own right and, more importantly (assuming you have packaged the software properly), it can be set up, configured and managed by the ops team.