Let me give you a hint: Not only is it faster, it’s also more reliable! (There, blog post done : )

Let me expand on the above:

It’s fast!

Seriously, a lot faster.

Anybody who has ever had to sit and pay the VSS tax, dreaming of that post-work beer while waiting for a history lookup, a search, or especially “View difference”, will know what I mean.

There are big differences in architecture between the two. I’ll discuss a few of them to give you an idea of why you should consider moving.

Storage:

TFS uses a SQL Server database to store Team Project Collections; VSS uses the file system. So how is this better?

· Size – (Yes, it does matter) VSS can store up to 4 GB reliably; TFS can go into terabytes

· Reliability – Ever had a network error when checking in on VSS? You’re left with corrupt files and a caffeine overdose. TFS performs each check-in as a transaction, which is rolled back if there is an error

· Indexing – The tables are indexed, so searches are faster. Did I mention TFS is faster?

· And of course, with a DB as your data store, you get all the usual goodies like mirrored and clustered DBs for TFS, so you never have to lose anything or suffer any downtime!
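To make the rollback point concrete, here is a minimal sketch of an all-or-nothing check-in. It is not TFS's actual schema or code: Python's built-in `sqlite3` stands in for SQL Server, and the `files` table and the `new_repo`, `check_in` and `stored_paths` names are made up for illustration.

```python
import sqlite3


def new_repo():
    """Create a throwaway in-memory store (hypothetical stand-in for the TFS database)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE files (path TEXT PRIMARY KEY, content TEXT)")
    return conn


def check_in(conn, files, fail_at=None):
    """Store every file in one transaction; if anything fails, nothing is stored."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            for index, (path, content) in enumerate(files):
                if index == fail_at:
                    # Simulate the network dropping halfway through the check-in.
                    raise ConnectionError("simulated network error")
                conn.execute(
                    "INSERT INTO files (path, content) VALUES (?, ?)",
                    (path, content),
                )
        return True
    except ConnectionError:
        return False


def stored_paths(conn):
    """Return the paths currently stored, sorted."""
    return [row[0] for row in conn.execute("SELECT path FROM files ORDER BY path")]


repo = new_repo()
print(check_in(repo, [("a.cs", "v1"), ("b.cs", "v1")], fail_at=1))  # False
print(stored_paths(repo))  # []
```

The first file was written before the simulated error, yet nothing survives the failed check-in. That is the behaviour you want from a source control store: either the whole check-in lands or none of it does.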

Networking:

TFS uses HTTP web services; VSS uses file shares (That should be enough said)

· Option of a TFS proxy for remote sites to save bandwidth and speed things up a little

· Did I mention that TFS is faster?

Security:

TFS uses Windows Role-Based Security vs. VSS security (I don’t think the methodology was good enough for someone to even come up with a name for it – I’ll just call it Stup-Id, there we go, you’re welcome ;)

Windows Role-Based Security vs. VSS’s Stup-Id:

· With Win Roles you can specify who’s allowed to view, check-out, check-in and lots more. With Stup-Id you can set rights per project, but all users must have the same rights for the database folder. This means all users can access and completely muck up the shared folders. Not pretty.
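For the curious, the role-based idea boils down to something like the sketch below. This is an illustration only, not the Windows or TFS security API: the `Permission` flags, the `ROLE_PERMISSIONS` table and the `is_allowed` helper are all hypothetical names.

```python
from enum import Flag, auto


class Permission(Flag):
    NONE = 0
    VIEW = auto()
    CHECK_OUT = auto()
    CHECK_IN = auto()


# Illustrative role table; real roles and rights are configured on the server.
ROLE_PERMISSIONS = {
    "Readers": Permission.VIEW,
    "Contributors": Permission.VIEW | Permission.CHECK_OUT | Permission.CHECK_IN,
}


def is_allowed(user_roles, needed):
    """Return True if the union of the user's roles grants every needed permission."""
    granted = Permission.NONE
    for role in user_roles:
        granted |= ROLE_PERMISSIONS.get(role, Permission.NONE)
    return (granted & needed) == needed


print(is_allowed(["Readers"], Permission.VIEW))       # True
print(is_allowed(["Readers"], Permission.CHECK_IN))   # False
print(is_allowed(["Readers", "Contributors"], Permission.CHECK_IN))  # True
```

The point is that rights hang off roles rather than off a shared folder that everybody can write to.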

Extra functionality and pure awesomeness:

· Shelvesets – these are really handy for storing code that you don’t want to commit just yet. Say you go for lunch and you’re afraid that BigDog might chew up your hard drive again: all you do is shelve your code, which stores it in TFS. Once you’ve replaced said eaten hard drive you just unshelve and... tada! No need to say “the dog ate my homework”.

· Code review – Developer A can request a code review from another developer, who can add comments to the code and send it back. (Basically sharing a shelveset)

· Gated check-ins: You can set rules to only allow check-ins when certain conditions have been met. For example, only check in code when:

o the code builds successfully, or

o all unit tests have passed, or

o the code has been reviewed

· Work Items – Bug/issue tracking made with love. It removes that nagging feeling at the back of your mind that one of these days a PHP or MySQL update will break your free open source ticketing/bug tracking system.

· Changesets – basically all the items that you’ve changed and are checking in together. You can also associate changesets with work items for better issue tracking.

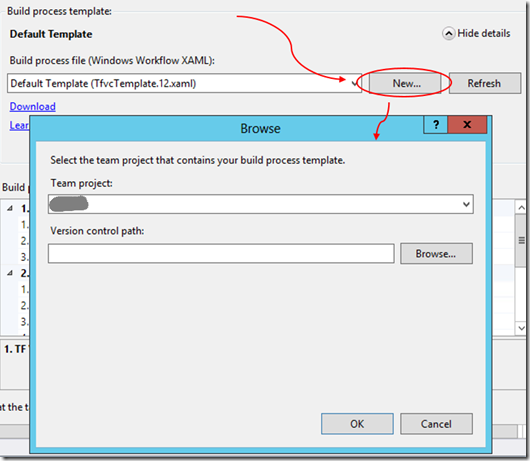

· Build automation – automate builds and deployments (How cool is this?)
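If the gated check-in idea from the list above sounds abstract, here is a rough sketch of the logic. It is not how you configure it in TFS (there the rules live on the server, not in your own code); the `PendingCheckIn` class, the `GATES` list and the `evaluate` function are hypothetical names used only to show the principle.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class PendingCheckIn:
    builds: bool
    tests_passed: bool
    reviewed: bool


Gate = Tuple[str, Callable[[PendingCheckIn], bool]]

# The three example conditions from the list above; pick whichever your team wants.
GATES: List[Gate] = [
    ("code builds", lambda change: change.builds),
    ("unit tests pass", lambda change: change.tests_passed),
    ("code reviewed", lambda change: change.reviewed),
]


def evaluate(change: PendingCheckIn, gates: List[Gate] = GATES) -> Tuple[bool, List[str]]:
    """Return (accepted, names of the gates that failed)."""
    failed = [name for name, check in gates if not check(change)]
    return (not failed, failed)


accepted, failed = evaluate(PendingCheckIn(builds=True, tests_passed=False, reviewed=True))
print(accepted, failed)  # False ['unit tests pass']
```

The check-in is only accepted when the list of failed gates is empty, so broken code never reaches everybody else's workspace.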

But for me the pièce de résistance is:

Have you ever had a new developer change files outside of the IDE? Maybe they cleared the read-only attribute and made some changes? This completely confuses VSS and is a great way to get your source control out of sync. In TFS you can edit files outside of the IDE to your heart’s content and TFS will pick up the changes and queue them for the next check-in.

How to move?

Google “Visual SourceSafe Upgrade Tool for Team Foundation Server” and follow the instructions.

And that is why TFS will make you happy. Better source control means better code quality, leading to happy customers, and maybe being the next Bill Gates (unless you wanted to be Guy Fawkes).